
Abstract

Background: An essential component of an educational program’s learning environment is its learning orientation. Several instruments attempt to measure the learning environment in graduate medical education (GME) but none focus on learning orientation. Thus, it is challenging to know if competency-based educational interventions, such as Education in Pediatrics Across the Continuum (EPAC), are achieving their objective of supporting mastery learning.

Objective: To revise Krupat’s Educational Climate Inventory (ECI), originally designed for medical students, and determine the modified instrument’s psychometric properties when used to measure the learning orientation in GME programs.

Methods: We included 12 items from the original ECI in our GME Learning Environment Inventory (GME-LEI) and added 10 new items. We hypothesized a three-factor structure, consisting of two sub-scales from the original ECI (Centrality of Learning also known as Learning Orientation; Competition and Stress) and a Support in the Learning Environment sub-scale. We administered the GME-LEI electronically to all residents (EPAC and traditional) in 4 pediatric GME programs across the United States. We performed confirmatory factor and parallel factor analyses, calculated Cronbach’s alpha for each sub-scale, and compared mean sub-scale scores between EPAC and traditional residents using a two-way analysis of variance.

Results: A total of 127 pediatric residents from 4 participating GME programs completed the GME-LEI. The final three-factor model was an acceptable fit to the data (CFI 0.86, RMSEA 0.07). Cronbach’s alpha for each sub-scale was acceptable (Centrality: 0.87, 95% CI 0.83, 0.9; Stress: 0.73, 95% CI 0.66, 0.8; Support: 0.77, 95% CI 0.71, 0.83). Mean scores on each sub-scale varied by program type (EPAC vs traditional) with EPAC residents reporting significantly higher scores in the Centrality of Learning sub-scale (2.03, SD 0.30, vs 1.79, SD 0.42; p=.023).

Conclusions: Our analysis suggests that the GME-LEI reliably measures three distinct aspects of the GME learning environment. GME-LEI scores are sensitive to detecting differences in the centrality of learning, or learning orientation, of EPAC and traditional pediatric residents. The GME-LEI can be used to identify areas of improvement in GME and help programs better support mastery learning of residents.

Introduction

The learning environment, or the setting in which learning happens, powerfully influences learners’ educational experience. Perceptions of an environment that supports learning are associated with higher levels of learner achievement,1 greater motivation for learning,2 lower rates of learner burnout,3-5 and improved patient experiences.6 The critical influence of the learning environment is noted by accrediting bodies such as the Liaison Committee on Medical Education7 and the Accreditation Council for Graduate Medical Education.8,9 Both require medical education programs to monitor their learning environments.

An essential component of the learning environment is the learning orientation that the program fosters.1 One way to conceptualize learning orientation is using Dweck’s conceptual framework of a growth versus a fixed mindset.10,11 A growth mindset, or mastery orientation, focuses on increasing learners’ knowledge and skills, critical thinking, and self-directed learning, all of which are essential to the practice of medicine.12 Uncertainty and learning from mistakes are valued, with growth fostered through constructive feedback. In contrast, a fixed mindset, or performance orientation, focuses on measuring and documenting learners’ achievement. Mistakes are viewed as failures and feedback is avoided because it critiques performance.

In undergraduate medical education (UME), a variety of instruments exist to assess the learning environment;13-19 however, many of these instruments are of mixed quality and are often not informed by conceptual or theoretical frameworks.20,21 Furthermore, only one of these instruments, the Educational Climate Inventory (ECI) developed by Krupat et al,16 focuses specifically on a mastery versus performance orientation. Given its UME focus, we refer to this instrument as the UME-ECI. The UME-ECI allows educators to assess students’ perceptions of the learning orientation fostered by their medical school and, per the authors, “has potential as an evaluation instrument to determine the efficacy of attempts to move health professions education toward learning and mastery”.16 Importantly, unlike many other instruments that measure elements of the UME learning environment, the UME-ECI meets many of the criteria for learning environment assessment.20

Compared to UME, fewer instruments exist to measure the learning environment in graduate medical education (GME).22-26 Two of the most frequently used instruments, developed by Boor et al.22 and Roff et al.,25 are well validated but focus on teacher-learner relationships and supervision of learners without addressing the learning orientation. Given the importance of learning orientation as part of an institution’s learning environment, and the lack of validated instruments that measure learning orientation in GME, we adapted the UME-ECI for the GME setting and created a new instrument, the GME Learning Environment Inventory (GME-LEI). We set out to collect reliability and validity evidence for its use in GME and to answer two research questions:

1. Will the GME-LEI prove to have similar psychometric properties as the UME-ECI, including sub-scale structure and sub-scale reliability?

2. Do resident physicians enrolled in the Education in Pediatrics Across the Continuum (EPAC) program, a competency-based medical education program that is known to foster a mastery learning orientation, score differently on the GME-LEI compared to residents in traditional pediatric residencies?

In this article, we describe the development of the GME-LEI and present the outcomes of the data we collected using the adapted instrument to address our research questions.

Methods

Instrument Development and Administration:

To measure the learning orientation within GME programs consisting of both EPAC and traditional pediatric residents, we revised the UME-ECI in a stepwise fashion. We solicited the input of the EPAC steering committee, which consists of 4 to 5 representatives of each EPAC school and 4 to 6 consultants funded to guide EPAC by the Association of American Medical Colleges (AAMC), during semi-annual face-to-face meetings. Based on the iterative discussions of this group, we retained most items for 2 of the 3 UME-ECI sub-scales to be used in the GME-LEI: Centrality of Learning (7 of 10 items) and Competitiveness and Stress in the Learning Environment (5 of 6 items).

Centrality of Learning focuses on learning orientation (i.e., performance vs mastery) in the residency program while Competitiveness and Stress in the Learning Environment focuses on how programs create stress and competitiveness in the learning environment. We omitted all items in the UME-ECI’s Passivity and Memorization sub-scale as they were more oriented to classroom or preclinical learning, and we perceived them to have little relevance to learning in the clinical environment. We replaced this sub-scale with a new Support of Learning sub-scale, and included 6 items pertaining to supervisory relationships, autonomy, and longitudinal experiences, elements we determined to be important in the context of the clinical environment. For example, we asked about residents’ ability to assume higher levels of responsibility when they feel ready and about whether programs foster long-term relationships with attendings.

Members from the national EPAC steering committee reviewed each item in the GME-LEI to ensure clarity of the language for residents and to ensure face validity when used in a GME setting. During this review process we replaced, for example, ‘faculty’ with ‘supervisor’ and ‘students’ with ‘residents’. To optimize clarity even further, we added 2 items in the Centrality of Learning sub-scale to make explicit reference to trust and partnering within interprofessional healthcare teams. The final GME-LEI instrument contained 22 items, organized into the 3 sub-scales (Centrality of Learning = 11, Competitiveness and Stress in the Learning Environment = 6, Support of Learning = 5). Items were designed such that participants could choose one response on a four-point Likert scale, which ranged from “strongly agree” to “strongly disagree.”

Participants:

We sent the GME-LEI electronically to all residents (EPAC and traditional) in 4 pediatric GME programs across the United States. EPAC is a pilot program designed to advance trainees through medical school and pediatric residency training based on competence, as opposed to time-in-training. What sets EPAC apart is its use of Entrustable Professional Activities (EPAs)27,28 as an assessment framework and entrustment supervision scales to track trainees’ progress and determine their readiness for advancement. Specific details of EPAC’s assessment process have been reported previously.28 Trainees enrolled in EPAC also take part in additional longitudinal experiences compared to trainees in traditional UME and GME programs. These additional longitudinal experiences, combined with the EPA evaluation framework, are intended to help foster a mastery orientation in their respective programs.29

Instrument Administration and Data Collection:

We administered the GME-LEI electronically between December 2019 and April 2020, using the Association of Pediatric Program Directors (APPD) LEARN Coordinating Center,30 an existing platform that was part of a larger pediatrics educational research network. We assigned each respondent a unique identifier so that responses were anonymous but were linked to the individual’s participating institution, year of training, and EPAC status. As responses to the survey were part of a program evaluation, all residents were expected to participate, but in an anonymous manner so that a decision not to participate could not be tracked or result in any negative consequence. Distribution of the instrument varied by program, with some programs providing an electronic survey link to each resident during their semi-annual meeting with the residency program director, while other programs sent out the survey link to all residents via email. All responses were stored in the APPD LEARN Coordinating Center database. Data were collected as part of an EPAC program evaluation, and thus the data collection and analysis were determined by the IRB at each participating institution to be exempt human subjects research.

Data Analysis:

We first performed a confirmatory factor analysis (CFA) on the items retained from the UME-ECI to ensure that the expected two-factor structure was present. We then fitted a three-factor CFA model to all GME-LEI responses. We allowed the 2 additional Centrality of Learning items to load on any of the three factors, hypothesizing that they would load only on Centrality of Learning. We assigned the new Support of Learning items to a third factor and examined modification indices to determine whether any of the Support of Learning items would be better placed in one of the original factors than in the new factor. Finally, we performed a check for unidimensionality on each sub-scale by conducting a parallel exploratory factor analysis to evaluate the fit of a single underlying factor for each sub-scale, and then calculated Cronbach’s alpha for each sub-scale to summarize its interitem reliability.
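As an illustration of the reliability step described above, the following minimal Python sketch computes Cronbach’s alpha from item-level responses using the standard formula, alpha = k/(k-1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The analysis in this study was run in R; the function name and the toy responses below are hypothetical and are not study data, and the sketch does not reproduce the confidence intervals reported in the Results.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one sub-scale.

    `responses` is a list of rows, one per respondent; each row is a list
    of numeric item scores in the same item order.
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(n_items)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses from five residents to a four-item sub-scale.
toy_responses = [
    [1, 2, 1, 2],
    [2, 2, 2, 3],
    [3, 3, 2, 3],
    [3, 4, 3, 4],
    [4, 4, 4, 4],
]
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 2))
```

Alpha approaches 1 when items rise and fall together across respondents, which is why it is read as a summary of inter-item consistency within a sub-scale.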

The second part of the analysis focused on using the instrument to answer the second research question. We calculated separate mean scores and standard deviations for residents in each program site, and each program type, EPAC vs traditional. Within the Competitiveness and Stress sub-scale, one item, which was worded positively, was reverse scored, resulting in a scale where higher scores indicated more competitiveness and stress. One item in the Support of Learning sub-scale was negatively worded, and thus was reverse scored, resulting in a scale where higher scores indicated more support. All items in the Centrality of Learning sub-scale were positively worded and thus higher scores indicated a more mastery-focused learning orientation.
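The reverse-scoring and sub-scale scoring steps can be sketched as follows. This is a minimal Python illustration, not the study’s scoring code: the numeric coding of the four-point scale (assumed here to be 1 to 4), the item labels, and the example responses are all hypothetical.

```python
from statistics import mean

# Assumed numeric coding of the four-point Likert scale (not stated in the text).
SCALE_MIN, SCALE_MAX = 1, 4


def reverse_score(value, low=SCALE_MIN, high=SCALE_MAX):
    """Flip a score so that the lowest code becomes the highest and vice versa."""
    return low + high - value


def subscale_mean(responses, items, reversed_items=()):
    """Mean of one respondent's item scores, reverse scoring the flagged items."""
    scores = [
        reverse_score(responses[item]) if item in reversed_items else responses[item]
        for item in items
    ]
    return mean(scores)


# Hypothetical item labels and one hypothetical respondent.
stress_items = ["stress_1", "stress_2", "stress_3"]
resident = {"stress_1": 4, "stress_2": 3, "stress_3": 1}

# "stress_3" stands in for the single oppositely worded item in the sub-scale.
stress_score = subscale_mean(resident, stress_items, reversed_items={"stress_3"})
print(round(stress_score, 2))
```

Reverse scoring before averaging keeps every item in a sub-scale pointing in the same direction, so that a higher sub-scale mean has a single interpretation.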

We compared mean scores across groups using a two-way analysis of variance. Data analysis was conducted using R 3.6 (R Core Team, Vienna, Austria). We used two-sided hypothesis tests and considered p values less than .05 significant.
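To make the comparison concrete, the sketch below computes the F statistics of a two-way analysis of variance in plain Python. Several assumptions are ours rather than the study’s: the two factors are taken to be program site and program type, an interaction term is included, and the toy data are balanced (equal numbers in every cell). The study data were unbalanced and were analyzed in R, where a regression-based approach would be needed; the function name and all values below are hypothetical. The sketch stops at the F statistics, since p values require the F distribution, which the Python standard library does not provide.

```python
from statistics import mean


def two_way_anova(cells):
    """Balanced two-way ANOVA with an interaction term.

    `cells` maps (level_a, level_b) to a list of scores. Every combination
    of levels must be present with the same number (>= 2) of observations.
    Returns the F statistic and degrees of freedom for each effect.
    """
    levels_a = sorted({a for a, _ in cells})
    levels_b = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    fully_crossed = len(cells) == len(levels_a) * len(levels_b)
    balanced = all(len(scores) == n for scores in cells.values())
    if not (fully_crossed and balanced and n >= 2):
        raise ValueError("this sketch needs a balanced, fully crossed design")

    grand = mean(x for scores in cells.values() for x in scores)
    mean_a = {a: mean(x for b in levels_b for x in cells[(a, b)]) for a in levels_a}
    mean_b = {b: mean(x for a in levels_a for x in cells[(a, b)]) for b in levels_b}

    # Sums of squares for each main effect, the interaction, and the residual.
    ss_a = n * len(levels_b) * sum((m - grand) ** 2 for m in mean_a.values())
    ss_b = n * len(levels_a) * sum((m - grand) ** 2 for m in mean_b.values())
    ss_ab = n * sum(
        (mean(cells[(a, b)]) - mean_a[a] - mean_b[b] + grand) ** 2
        for a in levels_a
        for b in levels_b
    )
    ss_error = sum((x - mean(scores)) ** 2 for scores in cells.values() for x in scores)

    df_a, df_b = len(levels_a) - 1, len(levels_b) - 1
    df_ab = df_a * df_b
    df_error = len(levels_a) * len(levels_b) * (n - 1)
    ms_error = ss_error / df_error

    return {
        "factor_a": {"F": ss_a / df_a / ms_error, "df": (df_a, df_error)},
        "factor_b": {"F": ss_b / df_b / ms_error, "df": (df_b, df_error)},
        "interaction": {"F": ss_ab / df_ab / ms_error, "df": (df_ab, df_error)},
    }


# Hypothetical sub-scale means: factor a is site, factor b is program type.
toy_cells = {
    ("A", "EPAC"): [2.0, 2.2],
    ("A", "traditional"): [1.6, 1.8],
    ("B", "EPAC"): [2.1, 2.3],
    ("B", "traditional"): [1.7, 1.9],
}
result = two_way_anova(toy_cells)
print(result["factor_b"])  # effect of program type in the toy data
```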

Results

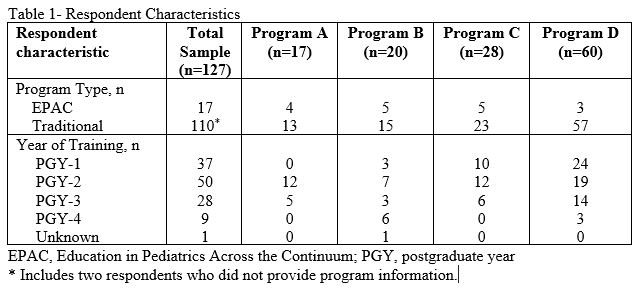

A total of 127 pediatric residents from 4 participating GME programs completed the GME-LEI. The response rates of program sites A-D were 53%, 18%, 35%, and 67%, respectively, with a total response rate of 40% across sites. Respondents’ characteristics are described in Table 1; two responses did not include program information and thus were not used in the CFA.

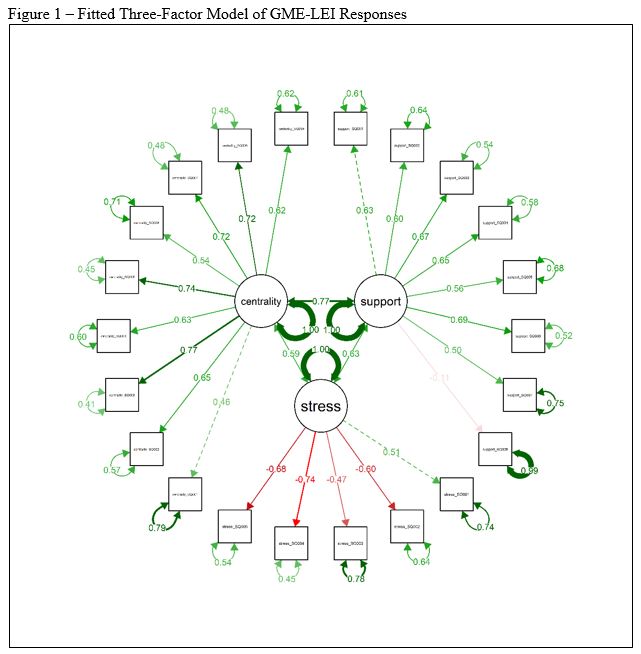

In response to our first research question, the items from the UME-ECI were well-characterized by a two-factor model (CFI 0.94, RMSEA 0.06, Supplementary Appendix 1). In the three-factor model, the two new items added to the GME-LEI related to relationships with interprofessional healthcare team members loaded significantly only on to the Centrality of Learning factor (Supplementary Appendix 2). All but one of the new items included in the GME-LEI’s Support of Learning sub-scale loaded significantly on to a third factor, and model fit could not be improved by moving them to any other sub-scale. The final three-factor model was an acceptable fit to the data (CFI 0.86, RMSEA 0.07, Figure 1).
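For readers less familiar with the fit indices reported here, the commonly used definitions of the comparative fit index (CFI) and the root mean square error of approximation (RMSEA) are given below. These are standard textbook forms, not formulas taken from this study, which does not report the underlying chi-square values. The subscript M denotes the fitted model, B the baseline (independence) model, and N the sample size; some software uses N rather than N - 1 in the RMSEA denominator.

```latex
\mathrm{CFI} = 1 - \frac{\max\left(\chi^2_M - df_M,\ 0\right)}
                        {\max\left(\chi^2_M - df_M,\ \chi^2_B - df_B,\ 0\right)}
\qquad
\mathrm{RMSEA} = \sqrt{\frac{\max\left(\chi^2_M - df_M,\ 0\right)}{df_M\,(N - 1)}}
```

Under these definitions, CFI values closer to 1 and RMSEA values closer to 0 indicate better fit.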

A parallel factor analysis of each sub-scale suggested that each was best interpreted as unidimensional (Supplementary Appendix 3). Cronbach’s alpha for each sub-scale was acceptable (Centrality: 0.87, 95% CI 0.83, 0.9; Stress: 0.73, 95% CI 0.66, 0.8; Support: 0.77, 95% CI 0.71, 0.83).

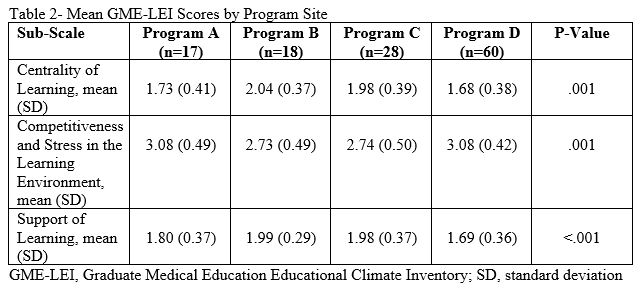

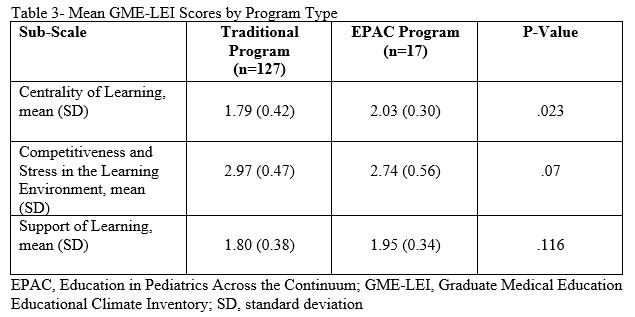

In response to research question 2, respondents’ mean score on each sub-scale varied by program site (Table 2) and program type, EPAC vs traditional (Table 3). EPAC residents had significantly higher scores on the Centrality of Learning sub-scale (2.03, SD 0.30, vs 1.79, SD 0.42; p=.023), suggesting a more mastery-focused learning orientation compared to residents in traditional programs.

Discussion

In this paper, we describe our revision of an existing instrument used to measure the learning orientation in UME programs. We also describe our attempt to provide reliability and validity evidence for this revised instrument, the GME-LEI, which measures the learning environment in GME programs and attends to learning orientation. The need for an instrument that measures learning orientation is pressing as enthusiasm and evidence for mastery orientation grow and as the learning environment remains pivotal to national accreditation. Our analysis suggests that the GME-LEI reliably measures three distinct aspects of the learning environment in GME programs: Centrality of Learning, Competitiveness and Stress in the Learning Environment, and Support of Learning. We confirmed through a CFA that items contained within the GME-LEI were well characterized by this three-factor model and calculated Cronbach’s alpha values that support the reliability of our new instrument. With its focus on learning orientation, the GME-LEI is unique among learning environment instruments in GME and helps to fill a gap in the literature and practice of measuring GME learning environments.

Our analysis found that in the Centrality of Learning sub-scale, EPAC residents tended to have higher mean scores than traditional residents. This suggests that the GME-LEI may be sensitive to detecting differences in learning orientation of EPAC and traditional pediatric residents. EPAC was intentional about advancing trainees based on competency, not time-in-training. In the EPAC program, students exhibited self-directed learning (e.g., actively seeking opportunities to engage in patient care) and action-oriented discernment (e.g., actively seeking constructive feedback).29 These behaviors, in addition to the unadjusted difference detected in GME-LEI scores, suggest that EPAC may foster a learning environment that supports mastery learning. Thus, the GME-LEI could be a useful tool in further elucidating the impact of competency-based medical education on micro-levels of learning.31

Limitations:

Our study has several important limitations to consider including the relatively small sample size and variable numbers of respondents at each of the four program sites. Both of these factors impacted our ability to adjust for potential confounders which may have affected the mean scores on the GME-LEI. The GME-LEI was administered over a period of 5 months. Learning environments are not static and it could be that residents’ perceptions in December were different than their perceptions in April. Finally, we revised the existing UME-ECI and modified it for the primary purpose of program evaluation, not research. Thus, the GME-LEI was not extensively piloted prior to administration.

Conclusion

The GME-LEI reliably measures three distinct aspects of the GME learning environment. As its scores may be sensitive to detecting differences in learning orientation, the GME-LEI could be used to identify areas of improvement and help GME programs better support mastery learning.

References

- Genn JM. AMEE Medical Education Guide No. 23 (Part 2): Curriculum, environment, climate, quality and change in medical education – a unifying perspective. Med Teach. 2001;23(5):445-454.

- Delva MD, Kirby J, Schultz K, Godwin M. Assessing the relationship of learning approaches to workplace climate in clerkship and residency. Acad Med. 2004;79(11):1120-1126.

- Billings ME, Lazarus ME, Wenrich M, Curtis JR, Engelberg RA. The effect of the hidden curriculum on resident burnout and cynicism. J Grad Med Educ. 2011;3(4):503-510.

- Llera J, Durante E. Correlation between the educational environment and burn-out syndrome in residency programs at a university hospital. Arch Argent Pediatr. 2014;112(1):6-11.

- Sum MY, Chew QH, Sim K. Perceptions of the Learning Environment on the Relationship Between Stress and Burnout for Residents in an ACGME-I Accredited National Psychiatry Residency Program. J Grad Med Educ. 2019;11(4 Suppl):85-90.

- Smirnova A, Arah OA, Stalmeijer RE, Lombarts K, van der Vleuten CPM. The Association Between Residency Learning Climate and Inpatient Care Experience in Clinical Teaching Departments in the Netherlands. Acad Med. 2019;94(3):419-426.

- Liaison Committee on Medical Education (2019). Function and structure of a medical school: Standards for accreditation of medical education programs leading to the M.D. degree. 2019; http://lcme.org/wp-content/uploads/filebase/standards/2020-21_Functions-and-Structure_2019-05-01.docx.

- Weiss KB, Bagian JP, Wagner R. CLER Pathways to Excellence: Expectations for an Optimal Clinical Learning Environment (Executive Summary). J Grad Med Educ. 2014;6(3):610-611.

- Weiss KB, Bagian JP, Wagner R, Nasca TJ. Introducing the CLER Pathways to Excellence: A New Way of Viewing Clinical Learning Environments. J Grad Med Educ. 2014;6(3):608-609.

- Dweck CS, Mangels JA, Good C. Motivational effects on attention, cognition, and performance. Motivation, emotion, and cognition: Integrative perspectives on intellectual functioning and development. Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers; 2004:41-55.

- Wolcott MD, McLaughlin JE, Hann A, et al. A review to characterise and map the growth mindset theory in health professions education. Med Educ. Apr 2021;55(4):430-440. doi:10.1111/medu.14381

- Richardson D, Kinnear B, Hauer KE, et al. Growth mindset in competency-based medical education. Med Teach. Jul 2021;43(7):751-757. doi:10.1080/0142159x.2021.1928036

- Chan CYW, Sum MY, Tan GMY, Tor PC, Sim K. Adoption and correlates of the Dundee Ready Educational Environment Measure (DREEM) in the evaluation of undergraduate learning environments – a systematic review. Med Teach. Dec 2018;40(12):1240-1247. doi:10.1080/0142159x.2018.1426842

- Eggleton K, Goodyear-Smith F, Henning M, Jones R, Shulruf B. A psychometric evaluation of the University of Auckland General Practice Report of Educational Environment: UAGREE. Educ Prim Care. Mar 2017;28(2):86-93. doi:10.1080/14739879.2016.1268934

- Irby DM, O’Brien BC, Stenfors T, Palmgren PJ. Selecting Instruments for Measuring the Clinical Learning Environment of Medical Education: A 4-Domain Framework. Acad Med. Feb 1 2021;96(2):218-225. doi:10.1097/acm.0000000000003551

- Krupat E, Borges NJ, Brower RD, et al. The Educational Climate Inventory: Measuring Students’ Perceptions of the Preclerkship and Clerkship Settings. Acad Med. Dec 2017;92(12):1757-1764. doi:10.1097/acm.0000000000001730

- Marshall RE. Measuring the medical school learning environment. J Med Educ. Feb 1978;53(2):98-104. doi:10.1097/00001888-197802000-00003

- Pololi L, Price J. Validation and use of an instrument to measure the learning environment as perceived by medical students. Teach Learn Med. Fall 2000;12(4):201-7. doi:10.1207/s15328015tlm1204_7

- Shochet RB, Colbert-Getz JM, Wright SM. The Johns Hopkins learning environment scale: measuring medical students’ perceptions of the processes supporting professional formation. Acad Med. Jun 2015;90(6):810-8. doi:10.1097/acm.0000000000000706

- Schönrock-Adema J, Bouwkamp-Timmer T, van Hell EA, Cohen-Schotanus J. Key elements in assessing the educational environment: where is the theory? Adv Health Sci Educ Theory Pract. 2012;17(5):727-742.

- Boor K, Van Der Vleuten C, Teunissen P, Scherpbier A, Scheele F. Development and analysis of D-RECT, an instrument measuring residents’ learning climate. Med Teach. 2011;33(10):820-827.

- Cannon GW, Keitz SA, Holland GJ, et al. Factors determining medical students’ and residents’ satisfaction during VA-based training: findings from the VA Learners’ Perceptions Survey. Acad Med. Jun 2008;83(6):611-20. doi:10.1097/ACM.0b013e3181722e97

- Pololi LH, Evans AT, Civian JT, Shea S, Brennan RT. Assessing the Culture of Residency Using the C – Change Resident Survey: Validity Evidence in 34 U.S. Residency Programs. J Gen Intern Med. Jul 2017;32(7):783-789. doi:10.1007/s11606-017-4038-6

- Roff S, McAleer S, Skinner A. Development and validation of an instrument to measure the postgraduate clinical learning and teaching educational environment for hospital-based junior doctors in the UK. Med Teach. Jun 2005;27(4):326-31. doi:10.1080/01421590500150874

- Schönrock-Adema J VM, Raat AN, Brand PL. Development and validation of the Scan of Postgraduate Educational Environment Domains (SPEED): A brief instrument to assess the educational environment in postgraduate medical education. PLoS One. 2015.

- Andrews JS, Bale JF, Jr., Soep JB, et al. Education in Pediatrics Across the Continuum (EPAC): First Steps Toward Realizing the Dream of Competency-Based Education. Acad Med. Mar 2018;93(3):414-420. doi:10.1097/acm.0000000000002020

- Murray KE, Lane JL, Carraccio C, et al. Crossing the Gap: Using Competency-Based Assessment to Determine Whether Learners Are Ready for the Undergraduate-to-Graduate Transition. Acad Med. Mar 2019;94(3):338-345. doi:10.1097/acm.0000000000002535

- Caro Monroig AM, Chen HC, Carraccio C, Richards BF, Ten Cate O, Balmer DF. Medical Students’ Perspectives on Entrustment Decision-Making in an EPA Assessment Framework: A Secondary Data Analysis. Acad Med. 2020.

- Schwartz A, Young R, Hicks PJ; for APPD LEARN. (2014). Medical Education Practice-Based Research Networks: Facilitating collaborative research. Medical Teacher, 38:1, 64-74.

- Ten Cate O, Balmer DF, Caretta-Weyer H, Hatala R, Hennus MP, West DC. Entrustable Professional Activities and Entrustment Decision Making: A Development and Research Agenda for the Next Decade. Acad Med. 2021 Jul 1;96(7S):S96-S104. doi: 10.1097/ACM.0000000000004106. PMID: 34183610.

Return to Table of Contents: 2022 Journal of the Academy of Health Sciences: A Pre-Print Repository

Measuring the Learning Orientation Fostered by Pediatric Residency Programs: Adapting an Instrument Developed for UME by Jonathan Sawicki, MD, Boyd F. Richards, PhD, Alan Schwartz, PhD & Dorene Balmer, PhD